The greatest example of this is Zeno's paradox. Zeno was a Greek philosopher in the 5th century B.C. He created several paradoxes, but I'm going to focus on the paradox of Achilles and the tortoise. One day, the tortoise challenges Achilles to a race. Achilles, as you know, was a great and powerful Greek warrior. Surely he should have no problem racing a tortoise. But, knowing it is at a disadvantage, the tortoise asks for a head start of 10 meters. Achilles agrees, knowing he can recover from such a small disadvantage with ease. So, the race begins with the tortoise 10 meters ahead, inching along as tortoises do. Achilles bursts from the starting line like a locomotive. He covers the 10 meters in a few seconds. But by the time he does this, the tortoise has moved ahead, though only slightly. No matter. In a brief moment he covers that distance as well. But in the time it took him to do this, however small, the tortoise has moved ahead still. And by the time he reaches where the tortoise is now, it will have moved ahead again. So it seems that he can never really catch the tortoise, as the tortoise will always be ahead.

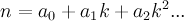

This is certainly a baffling result. I mean, no one in their right mind would claim that Achilles would lose to a tortoise. But then, what went wrong? What was wrong with our reasoning? In this case, the heart of the problem lies in dealing with infinite sums. Think about it. It takes Achilles a certain amount of time to run the first 10 meters, and in that time the tortoise will have moved a little bit ahead. It takes Achilles some time to cover the second distance, some time to cover the third distance, and so on. Zeno is proposing that since there are infinitely many periods of time, the total time taken (i.e. the sum) must itself be infinite. The problem, as modern mathematics has shown us, is that this simply isn't true. It turns out that when each period of time is smaller than the previous one, it is possible for the sum of all of them to be finite. It is not guaranteed, though: just because the terms of the sum are decreasing doesn't mean the infinite sum will be finite. See the harmonic series, 1 + 1/2 + 1/3 + 1/4 + ..., which grows without bound.

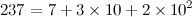

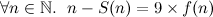

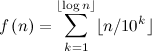
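The difference between a sum that settles down and one that doesn't is easy to poke at numerically. Here is a rough sketch in Python that compares partial sums of the halving series 1/2 + 1/4 + 1/8 + ... with partial sums of the harmonic series. The function names are my own, and floating-point numbers are only approximations, so treat this as an illustration rather than a proof.

```python
def partial_sum(term, n):
    """Add up term(1) + term(2) + ... + term(n)."""
    return sum(term(k) for k in range(1, n + 1))


def halving(k):
    # k-th term of 1/2 + 1/4 + 1/8 + ...
    return 0.5 ** k


def harmonic(k):
    # k-th term of 1 + 1/2 + 1/3 + ...
    return 1.0 / k


for n in (10, 100, 1000):
    # The halving column stalls near 1, the harmonic column keeps creeping up.
    print(n, partial_sum(halving, n), partial_sum(harmonic, n))
```

Both series have terms that shrink toward zero, but only the first one levels off. The harmonic sums grow very slowly, which is exactly why the series is such a good warning example.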

To see this in action, let's calculate how long it does take Achilles to reach the tortoise. First off, to make the math easier, let's say the tortoise was only 1 meter ahead and was traveling at 1 m/s and Achilles was traveling at 2 m/s. Achilles covers the first meter in 1/2 of a second, but by that time the tortoise has moved 1/2 of a meter ahead of its previous position. Achilles will cover the next 1/2 meter in 1/4 of a second and the tortoise will move ahead 1/4 meter, and so on. The periods of time it takes Achilles to cover the distances are 1/2 second, 1/4 second, 1/8 second, 1/16 second, and so on. So the total time until he catches the tortoise is 1/2 + 1/4 + 1/8 + ... . But what does this sum up to? Let's take it bit by bit. 1/2 + 1/4 = 3/4. And 3/4 + 1/8 = 6/8 + 1/8 = 7/8. And 7/8 + 1/16 = 14/16 + 1/16 = 15/16. I'm sure you see the pattern. The denominator is always a power of two while the numerator is one less than the denominator. It's obvious that this sum will not go off to infinity. In fact, the sum will never be greater than 1. But it will be getting closer and closer to 1 the more terms we add. Since we can get arbitrarily close to 1 but never exceed it, we can conclude it will take Achilles exactly 1 second to catch up to the tortoise.
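If you'd rather let a computer do the bookkeeping, the stage-by-stage race can be sketched with exact fractions. The function `zeno_stages` and its parameter names are made up for this illustration. It just replays the steps described above: Achilles closes the current gap, and meanwhile the tortoise opens a new, smaller one.

```python
from fractions import Fraction


def zeno_stages(head_start, achilles_speed, tortoise_speed, stages):
    """Return the time Achilles spends on each of the first `stages` legs."""
    gap = Fraction(head_start)
    times = []
    for _ in range(stages):
        t = gap / achilles_speed   # time for Achilles to close the current gap
        times.append(t)
        gap = tortoise_speed * t   # distance the tortoise moved in that time
    return times


# The numbers from the text: 1 meter head start, 2 m/s versus 1 m/s.
times = zeno_stages(head_start=1, achilles_speed=2, tortoise_speed=1, stages=4)

running_total = Fraction(0)
for t in times:
    running_total += t
    print(t, running_total)   # leg time, then total time so far
```

As a sanity check that skips the infinite sum entirely: Achilles is at position 2t and the tortoise is at 1 + t after t seconds, and those are equal when t = 1, which agrees with the limit of the running totals.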

And this is exactly the concept known as a limit in mathematics. The understanding of limits is central to the formulation of calculus and, as a result, to everything calculus is used for. That is a rather long list, intersecting with every field of science. The ideas that resolve this paradox are the same ideas that made calculus possible, one of the most revolutionary discoveries in the history of science. But this is just one of many paradoxes known today. Several of these have been explained thoroughly, but several more have yet to be solved.

One of the most famous paradoxes today is known as the Liar Paradox. It's been stated in many different forms, but the simplest is probably:

This statement is false.

You can easily see something is wrong here. Is the statement true or false? If we assume it's true, it must be correct in saying that it's false, and, thus, must be false, which is a contradiction. If we assume it's false, we can see it is correct when it says it's false, and must be true, which is also a contradiction.

When I first came across this, I thought it was simple. If it can't be false, and it can't be true, why not just call it neither, or "null"? Easy, right? Then someone made the statement:

This statement is false or null.

Well, this is a problem. If the statement is true or false, it succumbs to the same paradox as the original. And now it can't even be null, because then what it says would be correct, and it would actually be true. There is still a contradiction.
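You can check this kind of reasoning by brute force. The sketch below is a toy model of my own, not a formal theory of truth: it assumes a sentence should be "true" exactly when what it claims actually holds, and then tries every possible value to see which ones survive. The names `consistent_values`, `liar`, and `strengthened_liar` are invented for the example.

```python
def consistent_values(values, claim):
    """Return every value v the sentence could take without contradiction.

    claim(v) reports whether what the sentence says holds, given that the
    sentence itself has value v. Assumed rule: the sentence is "true"
    exactly when its claim holds.
    """
    return [v for v in values if (v == "true") == claim(v)]


def liar(v):
    # "This statement is false."
    return v == "false"


def strengthened_liar(v):
    # "This statement is false or null."
    return v in ("false", "null")


print(consistent_values(["true", "false"], liar))                       # two values only
print(consistent_values(["true", "false", "null"], liar))               # with "null" allowed
print(consistent_values(["true", "false", "null"], strengthened_liar))  # the new statement
```

With only true and false available, nothing works for the original statement. Allowing "null" rescues it, since "null" is the one value that passes. For the new statement, though, the list comes back empty again, which is the contradiction described above.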

So, we should be cautious. We don't know if our "solutions" result in new paradoxes. These things take lots of thought.

There have been a few proposed solutions to this problem, all of which have their merits and shortcomings. I personally haven't reached a conclusion that seems completely satisfactory. Nonetheless, investigating this paradox and paradoxes like it is always a fun experience. Who knows, one may just arrive at indisputable evidence of the inconsistency of logic. (But probably not.)
